In the last post, we discussed the basics of caching. In this post, I would like to recommend the adoption of a convenient abstraction that can help us to identify the best approach for caching for different contexts.

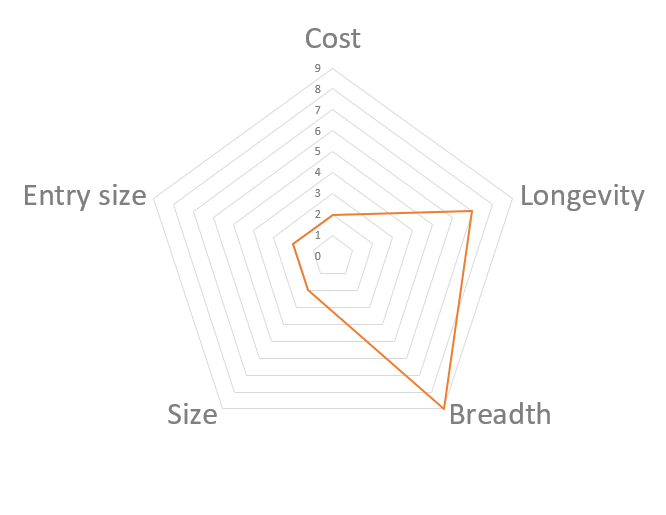

The abstraction I recommend here was inspired by an excellent Plurasight course, authored by Elton Stoneman (the course is a little bit outdated, but it is still relevant). Originally, Stoneman recommends us to take in consideration four different aspects about the data we need to cache: cost, breadth, longevity, size.

Being pragmatic, Stoneman suggests attributing a value between 1 and 10 for each type of data we are considering to cache and then plotting the result in a radar chart.

From my perspective, I would still recommend a fifth aspect: the size of each cached item (entry size).

The five aspects in detail

Let’s dig in each aspect mentioned above.

Cost

What is the cost of fetching data from the source? Initially, Stoneman emphasizes expensive database queries. But, today, we could amplify the idea considering the costs (in terms of money and time) and the availability of getting data from external APIs.

The higher the cost of obtaining the data, the more relevant it will be to adopt an excellent caching mechanism.

Breadth

How frequently would the data be used? The more frequent the use of a piece of data, the higher the benefits of caching.

In web applications, often twenty percent of the routes receive eighty percent or more of the requests. If there is data that is necessary for handling the majority of these requests, then caching it could improve the performance perceptibly.

Longevity

How long the data stays fresh? Since the application should rarely be serving stale or invalid data to the users, invalidating cache content is crucial. If it occurs frequently, the benefits of the caching will not be perceived and, sometimes, we would get an undesirable overhead in performance due to the cache management.

The longer the data stays fresh, the better it is for caching.

Size

How much memory would we need to deal with a data type efficiently? Are we talking about kilobytes, megabytes, gigabytes?

The more significant is the amount of memory needed, the more relevant are considerations about the infrastructure, and, eventually, about the cache entries replacement strategy.

Entry Size

What would be the size of each entry in a specified data type? Would it be small or large?

Big objects can be costly to serialize and to move across the network.

Architectural implications

Considering these five aspects, here are some architectural implications:

- Whenever it is possible, we should adopt a client-side cache. It reduces latency and reduces the pressure on the server-side;

- For .NET applications, In-process/In-memory cache is perfect for scenarios when: the data rarely (or never) changes, the data is frequently used, needs little memory, and each cache entry is smaller than 85Kb;

- An in-process cache is not a good idea when dealing with large amounts of memory;

- Using a server-side shared cache store can reduce the pressure over the original data source and improve the perceived performance;

- Using a server-side shared cache store is generally a better approach when dealing with big size caches;

- Using a server-side shared cache store generally makes easier the invalidation process;

- Depending on how frequent a type of data is used, it can be interesting to implement two cache levels reducing the pressure over the network;

- DO NOT implement your own caching store.

There are a lot more, …

That’s all I have to say … for a while

In this post, I touched some fundamental aspects to take in consideration when defining a caching strategy. In the next posts, let’s explore how to implement some cache strategies.

Do you consider some other important aspect that I ignored here? Share your thoughts in the comments.

Again: [tweet]Performance is a feature. Designing good solutions and writing good code is a habit.[/tweet]